@generalpha

Prompt

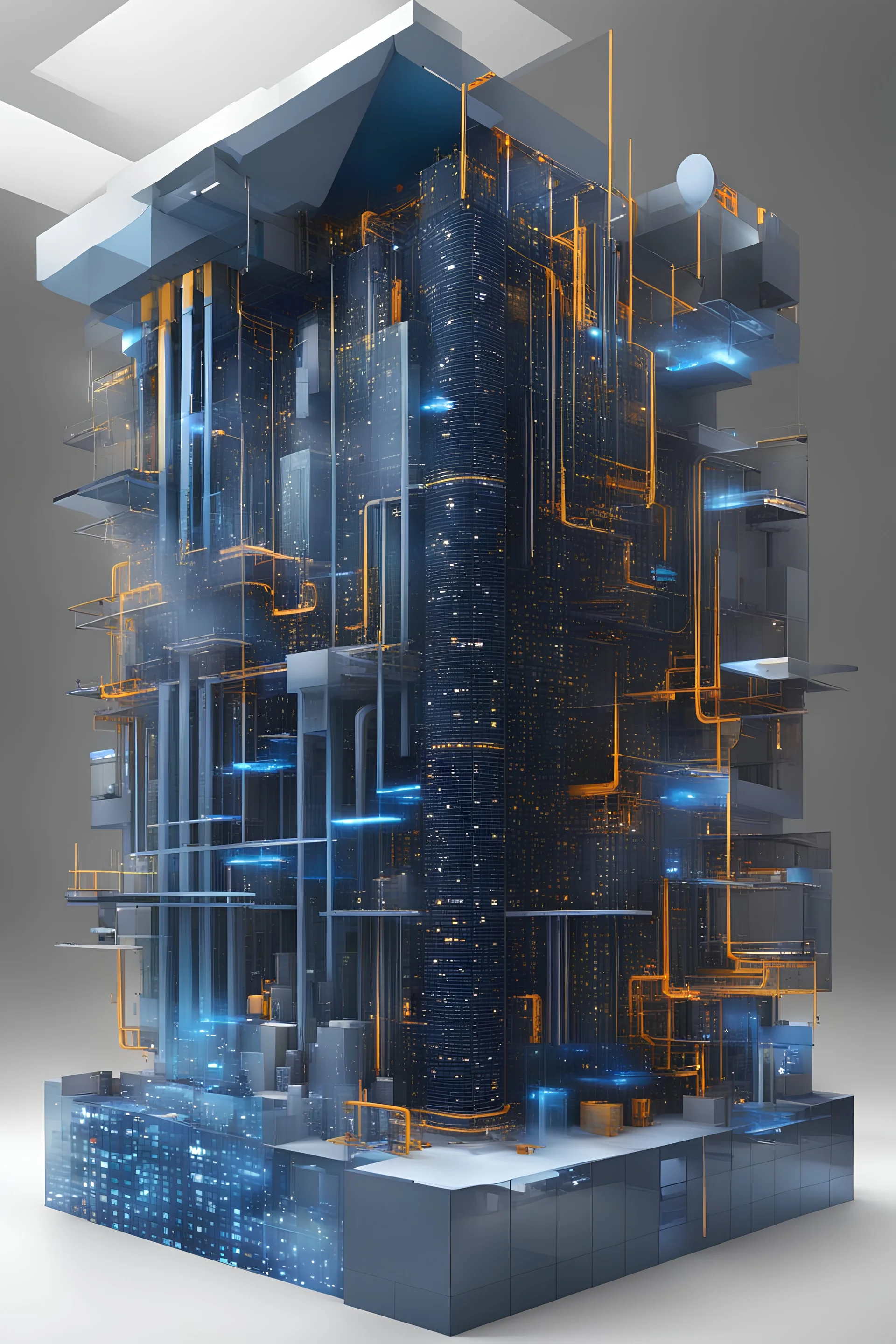

In the context of universal approximation, two approaches can achieve similar results but with different parameter requirements. The overall system comprises data, architecture, and a loss function, interconnected by a learning procedure. Responsibilities within the system include acknowledging noisy or biased data, addressing the need for a large number of parameters in the architecture, and overcoming the principal-agent problem in the choice of the loss function. To resolve these challenges,

Negative Prompt

doubles, twins, entangled fingers, Worst Quality, ugly, ugly face, watermarks, undetailed, unrealistic, double limbs, worst hands, worst body, Disfigured, double, twin, dialog, book, multiple fingers, deformed, deformity, ugliness, poorly drawn face, extra_limb, extra limbs, bad hands, wrong hands, poorly drawn hands, messy drawing, cropped head, bad anatomy, lowres, extra digit, fewer digit, worst quality, low quality, jpeg artifacts, watermark, missing fingers, cropped, poorly drawn

2 years ago

Model

SSD-1B

Guidance Scale

7

Dimensions

3328 × 4992
